New A.I. Robustness Testing Kit released from CEC’s LAiSR lab

Freely available to anyone in the A.I. security community, the testing kit allows security professionals to test A.I. models’ vulnerabilities and responses to attackers.

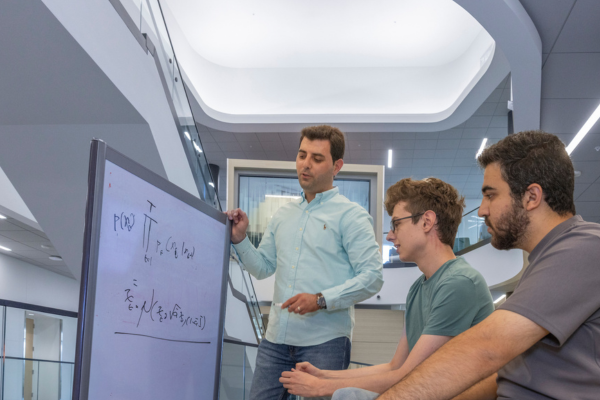

Samer Khamaiseh, assistant professor of Computer Science and Software Engineering, directs the Laboratory of A.I. Security Research (LAiSR) at Miami University’s College of Engineering and Computing. With a team of graduate computer science students, Khamaiseh has produced the A.I. Robustness Testing Kit (AiR-TK), which is now freely available on GitHub to anyone in the A.I. security community. Built on PyTorch, AiR-TK lets security professionals test where A.I. models are vulnerable and how they respond to attackers, so they can strengthen their defenses.

Currently, many in the A.I. security community use a method called adversarial training to defend against attacks on A.I. To visualize how adversarial training works “without getting into the math,” Khamaiseh likens it to “learning from the devil.” In other words, security experts study the attacks themselves and train models on attacked inputs so that the models learn to resist them. However, this method can take weeks or months of round-the-clock GPU processing time, which translates into a significant financial and computational burden. AiR-TK supports adversarial training directly: it implements state-of-the-art adversarial training methods and more than 25 adversarial attacks against machine learning and deep learning models.

AiR-TK also offers a time-saving alternative: a Model Zoo, a collection of adversarially pre-trained A.I. models that are ready to use. With these pre-trained models, security professionals and researchers can evaluate which defense works best against attacks without spending weeks, months, or longer adversarially training their own models.
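For readers curious what “learning from the devil” looks like in practice, here is a minimal sketch of adversarial training. It is not taken from AiR-TK, which is built on PyTorch; it is a toy, pure-Python illustration that assumes a two-feature logistic regression classifier and synthetic data, and it uses the Fast Gradient Sign Method (FGSM), one well-known adversarial attack, to perturb each training example before the model learns from it. All function names and parameter values (such as `eps=0.3`) are hypothetical and chosen only for illustration.

```python
import math
import random

# Toy illustration only: this is NOT the AiR-TK API. Function names,
# data, and hyperparameters are hypothetical.


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def predict(w, b, x):
    """Probability that x belongs to class 1 under logistic regression."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    """FGSM: shift each feature by eps in the direction that increases the loss.

    For binary cross-entropy on a logistic model, d(loss)/dx = (p - y) * w.
    """
    p = predict(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def adversarial_train(data, eps=0.3, lr=0.1, epochs=50, seed=0):
    """Train on FGSM-perturbed copies of each example ("learning from the devil")."""
    rng = random.Random(seed)
    data = list(data)  # copy so shuffling does not mutate the caller's list
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            x_adv = fgsm(w, b, x, y, eps)  # attack the current model
            g = predict(w, b, x_adv) - y   # loss gradient w.r.t. the logit
            w = [wi - lr * g * xi for wi, xi in zip(w, x_adv)]
            b -= lr * g
    return w, b


def accuracy(w, b, data, eps=0.0):
    """Accuracy on clean data (eps=0) or under an FGSM attack of size eps."""
    correct = 0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, eps)
        correct += (predict(w, b, x_eval) >= 0.5) == (y == 1)
    return correct / len(data)


def make_blobs(n=100, seed=0):
    """Two synthetic Gaussian clusters: class 0 near (-2, -2), class 1 near (2, 2)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        data.append(([rng.gauss(-2, 0.5), rng.gauss(-2, 0.5)], 0))
        data.append(([rng.gauss(2, 0.5), rng.gauss(2, 0.5)], 1))
    return data


if __name__ == "__main__":
    train, test = make_blobs(seed=1), make_blobs(seed=2)
    w, b = adversarial_train(train)
    print("clean accuracy:", accuracy(w, b, test))
    print("accuracy under FGSM (eps=0.3):", accuracy(w, b, test, eps=0.3))
```

The key design choice is that each gradient step uses the attacked input `x_adv` rather than the clean one. Stronger attacks, such as multi-step projected gradient descent (PGD), repeat the perturbation step several times per example, which multiplies the cost when the model is a large deep network.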

Under Khamaiseh’s leadership, LAiSR has released multiple A.I. security tools, of which the AiR-TK testing framework is the most recent. The lab’s mission focuses specifically on security for the new generation of A.I., including generative A.I. such as diffusion models and emerging large language models. “It's very hard to find one place where you can find the implementation of adversarial training methods, plus a Model Zoo,” said Khamaiseh. But now, with the release of AiR-TK, “You can just simply download these pre-trained models and compare your defense mechanism with that.” The ability to test and compare new defenses against these baseline defense methods benefits industry and the broader community, as well as researchers like Khamaiseh and his colleagues.

This latest LAiSR solution arrives as attacks on A.I. models are becoming increasingly dangerous. “When it comes to security, it's actually very scary,” said Khamaiseh. “But the good thing about that is, from the A.I. security perspective, you need to have a science foundation, like deep mathematics, to be able to create or invent an attack on A.I. It’s different from the attacks in traditional applications like software. However, adversaries can use the current adversarial attacks to compromise A.I. systems without such knowledge.”

Khamaiseh’s focus on this area of research began after he earned his Ph.D. in Cybersecurity and started working on machine learning-based distributed denial-of-service (DDoS) detection and mitigation systems. But one day while driving, Khamaiseh said, a new question came to him that he just couldn’t shake. “I said, ‘What would happen if a hacker compromised the A.I. model itself?’ We are so reliant on this model and take it for granted. The model is so honest and kind of secure, and it wasn’t clear to the community that the A.I. model itself will be hacked.” That question led Khamaiseh to begin new research in adversarial machine learning, which has been his focus for the past three years. “It fascinates me,” Khamaiseh said. “It's very, very complex. I'm very interested.”